Event Details:

Neural networks and the brain: from the retina to semantic cognition

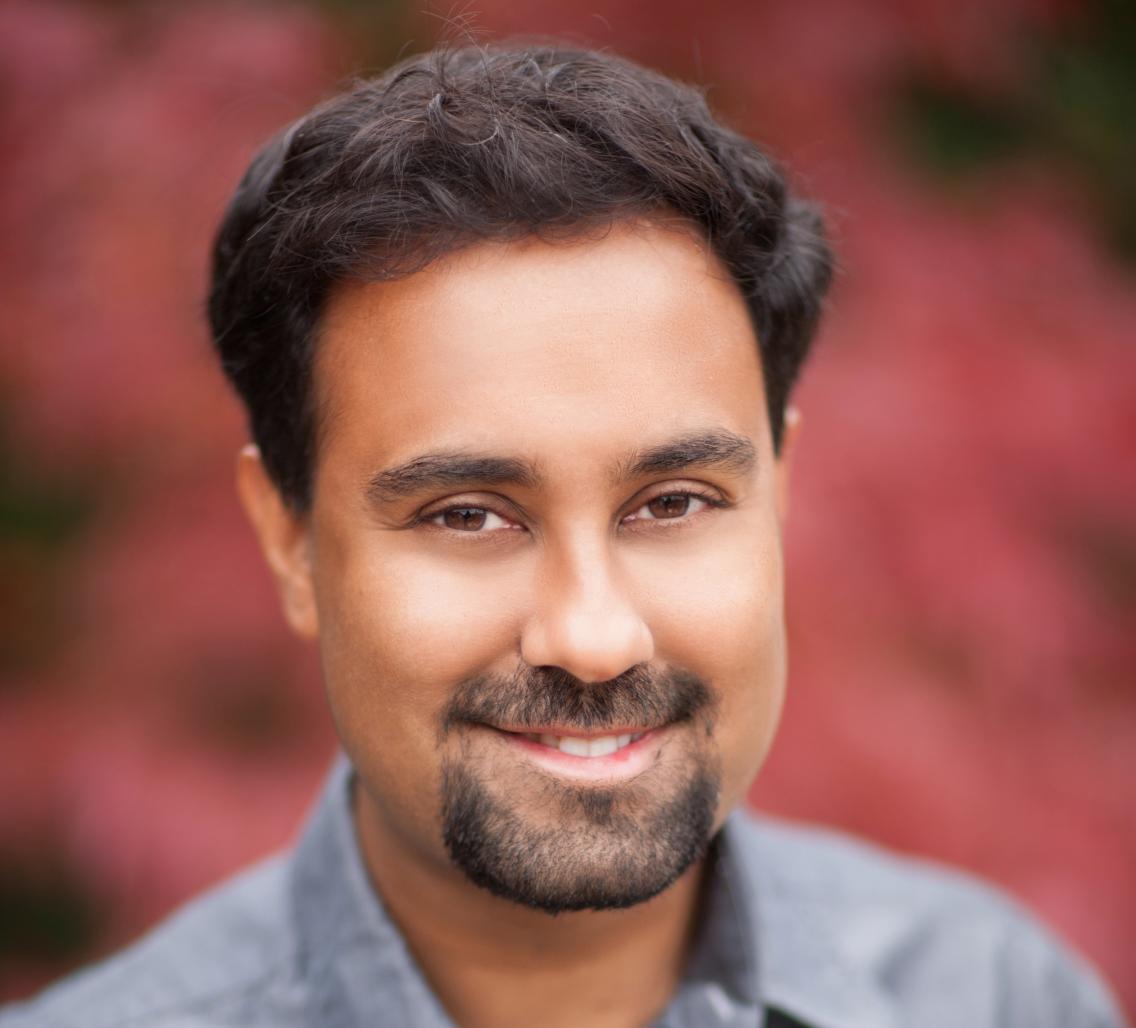

Surya Ganguli

Assistant Professor

Department of Applied Physics

Stanford University

Abstract

A synthesis of machine learning, neuroscience and psychology has the potential to elucidate how striking computations emerge from the interactions of neurons and synapses, with applications to biological and artificial neural networks alike. We discuss two vignettes along these lines. First, we demonstrate that modern deep learning methods yield state-of-the-art models of the retina that predict the retinal response to natural scenes with high precision, and recapitulate both the functional properties of the retinal interior and decades of physiological studies. Second, we review work from Jay McClelland and collaborators on how deep neural networks can describe a wide array of psychology experiments about the developmental time course of infant semantic cognition, including the hierarchical differentiation of concepts as infants get older. We describe a mathematical analysis that not only provides a natural explanation for the dynamics of human semantic development, but also leads to better algorithms for speeding up learning in artificial neural networks.

Bio

Surya Ganguli triple majored in physics, mathematics, and electrical engineering and computer science at the Massachusetts Institute of Technology, completed a PhD in string theory at the University of California, Berkeley, and a postdoc in theoretical neuroscience at the University of California, San Francisco. He is now an assistant professor of applied physics at Stanford University, where he leads the Neural Dynamics and Computation Lab, and is also a consulting professor at the Google Brain research team. His research spans the fields of neuroscience, machine learning and physics, focusing on understanding and improving how both biological and artificial neural networks learn striking emergent computations. He has been awarded a Swartz Fellowship in computational neuroscience, a Burroughs Wellcome Fund Career Award at the Scientific Interface, a Terman Award, a NIPS Outstanding Paper Award, a Sloan Research Fellowship, a James S. McDonnell Foundation Scholar Award in Understanding Human Cognition, a McKnight Scholar Award, and a Simons Investigator award in the mathematical modeling of living systems.