The co-evolution of neuroscience and AI

ChatGPT wouldn’t exist without neuroscience. Nor would the computer vision systems that help driverless cars cruise over the hills of San Francisco and iPhones unlock at a glance, nor the speech recognition technologies that enable Siri and Alexa to respond to our commands. All of these AI tools are grounded in computational structures called neural networks—which did not acquire their name by mere coincidence.

“There’s no doubt that neuroscience influenced the building of neural networks,” said Dan Yamins, a faculty scholar at the Wu Tsai Neurosciences Institute and associate professor of psychology and computer science at Stanford, during a fireside chat in October.

The chat—moderated by Lisa Giocomo, a professor of neurobiology and deputy director of Wu Tsai Neuro—rounded out the Institute’s day-long Brains and Machines Symposium, where experts from Stanford and around the world shared work at the intersection of neuroscience and artificial intelligence.

Giocomo guided the conversation through tricky waters, and the panelists discussed—and disagreed about—both the role that neuroscience will have in inspiring future AI systems and the role universities will play in making those systems a reality. But though the implications of academic neuroscience for future AI might be up for debate, the panel also found a major point of agreement: there is huge scientific progress to be made by applying AI tools to neuroscientific questions, and universities are where much of that work will happen.

“Even if it turned out that you didn’t really need to do neuroscience at this point to get inspiration [for AI systems], doing neuroscience and having models of the brain is incredibly important in its own right,” Yamins said. “There’s just no route between AI and helping solve diseases of the brain that doesn’t go through neuroscience.”

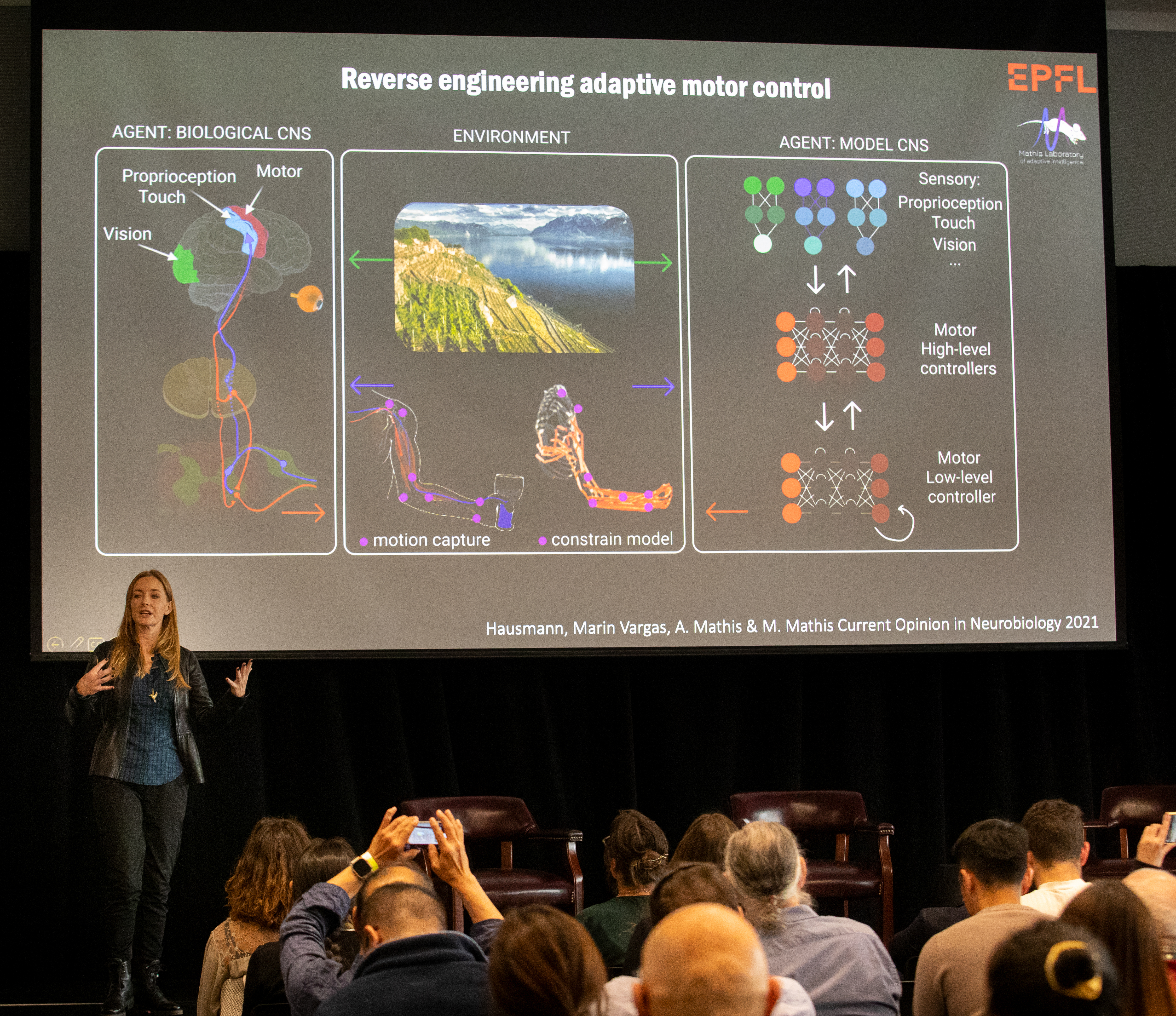

If the Brains and Machines Symposium was intended as an argument for AI’s potential as a neuroscientific tool, it was a powerful one. Andreas Tolias, professor of ophthalmology at Stanford, showed how neural network “twins” of mammalian visual systems can help uncover key principles of how those systems work. Mackenzie Mathis, assistant professor at the École Polytechnique Fédérale de Lausanne, showed in mice how AI systems can help scientists understand the brain’s control of movement by automatically detecting where body parts are during a neural recording. And at the fireside chat, Laura Gwilliams, a faculty scholar at Wu Tsai Neuro and Stanford Data Science and an assistant professor of psychology, spoke of using large language models “essentially as animal models” for studying language, which can’t be researched in mice or monkeys.

There’s an appealing symmetry to all of this work: the AI systems these scientists are using have deep roots in neuroscience. Perceptrons, the biologically inspired building blocks of neural networks, have been around since the 1950s, and visual system–inspired convolutional neural networks were first piloted in 1969. But whether and how current-day neuroscience will continue to inform AI development remains an open question.

Yamins, for one, argued that AI algorithms don’t need to be modeled on the latest insights from neuroscience. In his view, artificial systems don’t need to directly model biological intelligence to function well, so the fields should be able to evolve independently. His argument has some major recent AI developments on its side: transformer neural networks, which were introduced in 2017 and power large language models like GPT, don’t have any obvious resemblance to brain networks, although they do trace their genealogy to attention research in psychology.

But James Landay, the Denning Co-Director and Senior Fellow at Stanford HAI and the Anand Rajaraman and Venky Harinarayan Professor of Computer Science, voiced a very different perspective during the fireside chat. As effective as transformer-based tools like GPT may be at writing code and planning travel itineraries, they also require gargantuan amounts of energy to run. That does real harm to the planet. It’s in solving this problem that Landay sees a potential role for neuroscience in inspiring AI development.

“The brain is able to do all of this stuff with 20 watts,” Landay said. “Can we learn from that and come up with new algorithms, which there’s clearly a need for, for our planet’s future?”

Large language models also have an insatiable appetite for data, to the point that some experts think they could run out of training data in just a few years. Here, too, neuroscience could make a difference, according to Surya Ganguli, an associate professor of applied physics and HAI senior fellow affiliated with Wu Tsai Neuro.

“There’s a lot of work from psychology and neuroscience that could seep into AI to make it more data efficient,” Ganguli said in a recent episode of Wu Tsai Neuro’s podcast, From Our Neurons to Yours. Unlike large language models, which gobble up often-redundant web scrapings, humans actively seek out novel data by, say, reading books on unfamiliar topics or asking questions when they don’t understand a concept. “We haven’t quite gotten active learning to work with deep learning yet,” Ganguli said. “That’s an interesting research direction.”

Until AI’s energy and data hunger is sated, training cutting-edge systems will remain astronomically expensive, and academic institutions may struggle to keep up. Stanford is taking this challenge seriously: earlier this year, the university announced a new GPU cluster for researchers. But even without Big Tech-level compute resources, universities may still have a major role to play in advancing AI.

Jay McClelland, a professor of psychology and director of Wu Tsai Neuro’s Center for Mind, Brain, Computation and Technology, says that computational limitations might guide researchers toward promising questions. He is especially excited about attempts to reduce the size of gargantuan systems like today’s large language models so that they can be trained and run without the resources of a company like Meta. Such models, he says, wouldn’t just be more convenient and less costly: Because they are smaller and simpler, they might also be easier to understand, and the resulting insights could be transferable to the larger models. “There’s a huge role for research that reveals the essence of the solutions that neural networks find,” he says.

Academic culture makes that kind of research more possible than it might be in industry, according to Gwilliams. “The freedom to ask the question of, ‘Okay, why does the system work this way?’ or ‘Why does it do well?’—we have that freedom in academia,” she says.

And universities have something that no amount of money, or GPUs, can buy: freedom from the profit motive. “If you are in an industrial setting, if you’re working for OpenAI or Anthropic or Google, the Board of Directors and the CEO are asking you, ‘What are you doing for the bottom line?’ And they’re pressing you to make the models work for their corporate purposes, and they’re not trying to help us understand the brain,” McClelland says. “I am still in the university because I am fundamentally interested in understanding how the human brain works.”

Watch the entire 2024 Brains & Machines Symposium on our YouTube channel.