Project Summary

Abstract

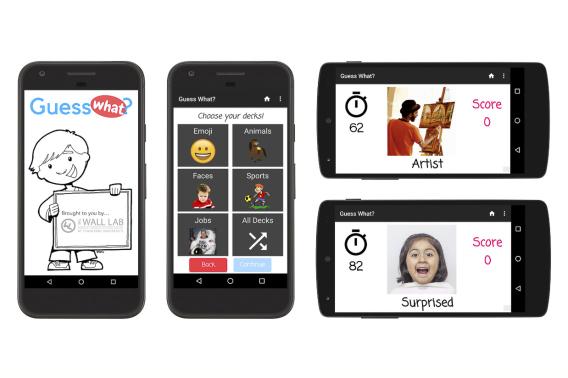

The rapid increase in the prevalence of autism has created a pressing need for translational bioinformatics solutions that can scale. Standard methods of care require laborious sessions at clinical facilities, where waitlists are several months long. New solutions must take the form of mobile health devices that are not restricted to use in clinical settings and that can complement or replace the current standards of care. We have invented a prototype mobile system, GuessWhat (guesswhat.stanford.edu), that turns the focus of the camera on the child during fluid social engagement with a social partner, reinforcing prosocial learning while simultaneously measuring the child’s progress in socialization. GuessWhat challenges the child to imitate social and emotion-centric prompts shown on the screen of a smartphone held just above the eyes of the person with whom the child is playing. Computer vision algorithms and emotion classifiers integrated into gameplay detect emotion in the child’s face via the phone’s front camera and determine whether it agrees with the displayed prompt; they also capture other features such as gaze, eye contact, and joint attention. During the course of this project, we will demonstrate that GuessWhat has the potential not only to provide important therapeutic gains that target core deficits of autism, but also to generate data that support efficacy evaluation, progress tracking, and iterative machine-learning model enhancement for precision healthcare that is individualized to the child and the child’s natural social partners.

Project Details

Program:

Funding Type:

Neuroscience:Translate Award

Award Year:

2019

Lead Researcher(s):