Ambitious brain recordings create unprecedented portrait of vision in action

When you view an identical object on different occasions — say your favorite coffee cup on different mornings — the way your brain represents the cup is never quite the same. This is one of the great mysteries of neuroscience: How does your brain reliably identify the object as being “the same” in the face of its constantly changing patterns of neural activity?

"Video recordings of the brain obtained in the study resemble a night sky — with neurons flickering on and off like twinkling stars." Video credit Ebrahimi et al, Nature 2022.

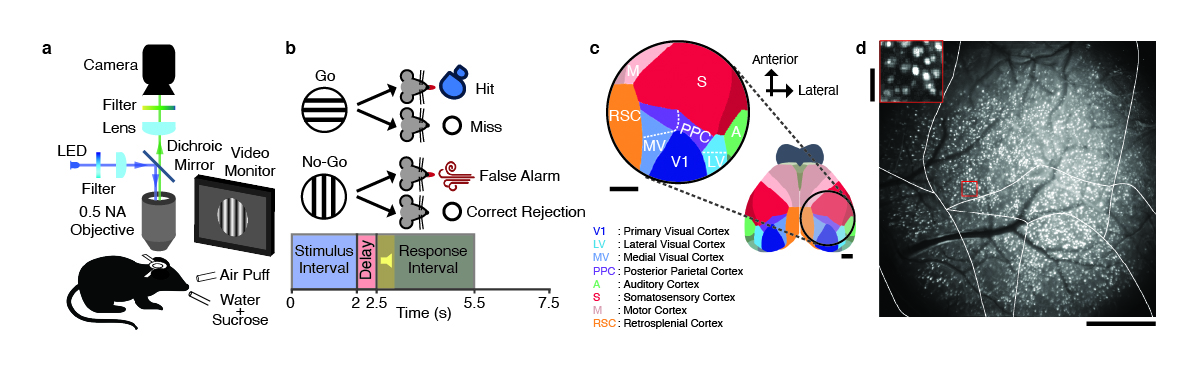

An unprecedented dataset is giving new insights into this and other neuroscience enigmas. Stanford scientists recorded the individual activity of thousands of neurons from eight different brain areas over several days, while animals repeatedly performed a visual discrimination task. What emerges is a detailed picture of how the brain processes visual cues — from perception to discrimination to behavioral response — a portrait that may have implications for technologies such as brain-computer interfaces and computer vision.

“This is the first study of vision in which neural activity has been recorded at cellular resolution across the entire visual cortex,” said Mark Schnitzer, professor of biology and applied physics, a member of the Wu Tsai Neurosciences Institute, and a Howard Hughes Medical Institute investigator. But the work raises as many questions as it answers, which is the point of exploratory work like this, Schnitzer said, adding, “It’s like you’re landing on the moon. You’re getting the first glimpses of something, and you want to explore.”

Information flow through the brain

In this study, published in Nature on May 18, 2022, the Stanford scientists trained mice to lick a spout after being shown horizontal lines but not vertical lines; the visual cue was presented for two seconds. The mice performed this task hundreds of times per day over five days while the scientists recorded their brain activity. The mice were genetically engineered to express a fluorescent protein in a particular class of cortical neurons, such that when a neuron fires, it glows more intensely, allowing researchers to image its activity.

A figure from the research team's new study gives an overview of their approach to imaging the activity of thousands of neurons across multiple cortical areas during a visual discrimination task. Figure courtesy of Ebrahimi et al., Nature 2022.

Video recordings of the brain obtained in the study resemble a night sky — with neurons flickering on and off like twinkling stars. By looking for statistical patterns in the glimmers, first author Sadegh Ebrahimi, a PhD student in electrical engineering, was able to detail the sequence of events that unfolds in the brain. Ebrahimi works in the Schnitzer lab and the lab of co-author Surya Ganguli, associate professor of applied physics. Ganguli is a member of the Wu Tsai Neurosciences Institute and associate director of the Stanford Institute for Human-Centered Artificial Intelligence (HAI).

Ganguli said, “We can trace the arc of information flow across these eight brain areas from sensory input to motor response, and we can watch the flow of information back and forth through the areas over time. Nobody has ever had the data to do that.”

0.2 seconds post-stimulus: A rise in redundancy

Immediately after each stimulus was presented, the researchers observed an initial flurry of neural activity, as if the brain were trying to make sense of the image. The researchers found something unexpected within the first 0.2 seconds: The number of neurons encoding the same information rose dramatically. The brain uses redundancy to help overcome the variability in individual neurons, also known as neural noise. But, until now, it wasn’t known that the amount of redundancy could shift dramatically within the same task.

Redundancy makes the signal more foolproof, but it’s inefficient. This trade-off might be worth it in the first moments of visual perception, Schnitzer explained. If someone shows you a cup, initially you need to take in all the visual details. But your brain quickly realizes “it’s a cup,” which is a more efficient representation.
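The trade-off can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not the analysis used in the study: every simulated neuron carries the same stimulus signal plus its own independent noise, and the function name `averaged_accuracy`, the signal strength, and the trial counts are all hypothetical choices made for illustration.

```python
import numpy as np

def averaged_accuracy(n_neurons, n_trials=4000, signal=0.5, seed=0):
    """Fraction of trials decoded correctly from the mean of n_neurons noisy copies.

    Hypothetical toy model: all values are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    # Stimulus label per trial: +1 (say, horizontal) or -1 (vertical).
    labels = rng.choice([-1.0, 1.0], size=n_trials)
    # Each neuron carries the same signal plus independent unit-variance noise.
    noise = rng.standard_normal((n_trials, n_neurons))
    responses = signal * labels[:, None] + noise
    # Decode by taking the sign of the population average.
    guesses = np.sign(responses.mean(axis=1))
    return float(np.mean(guesses == labels))

for n in (1, 4, 16, 64):
    print(n, "redundant neurons -> accuracy", round(averaged_accuracy(n), 3))
```

In this sketch, accuracy climbs quickly as the first few redundant neurons are added and then levels off, so each extra copy of the same information buys less. That is one simple way to picture why redundancy is reliable but inefficient.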

Indeed, the team found that after 0.2 seconds, redundancy began to fall. This may indicate that the brain was forming a consensus: horizontal lines or vertical lines.

0.5 seconds post-stimulus: Structure in noise

Starting at about 0.5 seconds, the neural representation of the stimulus became more stable. At this point, the researchers could discern whether an animal had viewed horizontal lines or vertical lines, based solely on the signals from its brain. Surprisingly, the computer models they used to interpret the signals, known as “decoders,” were robust to neural noise. Decoders trained on data from one day performed well when applied to data from subsequent days, despite substantial day-to-day fluctuations in the behavior of individual neurons.

The team found a possible explanation: The noise has structure. For decades, neuroscientists have recognized that brain noise is correlated; pairs of neurons tend to fluctuate in the same way. “They’re like lemmings. If one neuron fires a little bit more on a given trial, another neuron might fire a little bit more as well,” Ganguli explained. The significance of correlated noise is unclear, but the team discovered that their decoders were more accurate when they accounted for the correlated noise instead of ignoring it.

The team also discovered that day-to-day fluctuations in neural firing patterns were correlated with within-day fluctuations. “This has a lot of significance because it means that a decoder that is good on one individual day for taking into account that correlated variability will naturally be good across days,” Schnitzer said.
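A small simulation can make this reasoning concrete. The sketch below is not the study's decoder. It swaps in a standard Fisher linear discriminant and compares a version that uses the full noise covariance with one that ignores correlations. The functions `simulate_day`, `fit_decoder`, and `accuracy`, the tuning values, and the noise levels are all hypothetical, and the sketch assumes that the day-to-day drift lies along the same shared direction as the within-day noise.

```python
import numpy as np

def simulate_day(n_trials, tuning, shared_sd, rng, drift=0.0):
    """Simulate one hypothetical recording day (all parameters are assumptions)."""
    labels = rng.choice([-1.0, 1.0], size=n_trials)          # +1 horizontal, -1 vertical
    shared = shared_sd * rng.standard_normal((n_trials, 1))  # noise common to all neurons
    private = rng.standard_normal((n_trials, tuning.size))   # independent noise per neuron
    # `drift` shifts every neuron together, along the shared-noise direction.
    return labels[:, None] * tuning + shared + private + drift, labels

def fit_decoder(x, labels, use_correlations):
    """Fisher linear discriminant; optionally drop off-diagonal covariance terms."""
    mean_pos = x[labels > 0].mean(axis=0)
    mean_neg = x[labels < 0].mean(axis=0)
    residuals = x - np.where(labels[:, None] > 0, mean_pos, mean_neg)
    cov = residuals.T @ residuals / (len(x) - 2)  # pooled noise covariance
    if not use_correlations:
        cov = np.diag(np.diag(cov))               # pretend the neurons are independent
    weights = np.linalg.solve(cov, mean_pos - mean_neg)
    bias = -weights @ (mean_pos + mean_neg) / 2.0
    return weights, bias

def accuracy(decoder, x, labels):
    weights, bias = decoder
    return float(np.mean(np.sign(x @ weights + bias) == labels))

rng = np.random.default_rng(1)
tuning = np.linspace(0.0, 1.0, 20)  # hypothetical stimulus preference per neuron
day1_x, day1_y = simulate_day(4000, tuning, 2.0, rng)             # training trials
held_x, held_y = simulate_day(4000, tuning, 2.0, rng)             # same day, held out
day2_x, day2_y = simulate_day(4000, tuning, 2.0, rng, drift=2.0)  # later day with drift

for name, use_corr in (("with correlations", True), ("ignoring correlations", False)):
    decoder = fit_decoder(day1_x, day1_y, use_corr)
    print(name,
          "| same day:", round(accuracy(decoder, held_x, held_y), 3),
          "| next day:", round(accuracy(decoder, day2_x, day2_y), 3))
```

In this toy setting, the decoder that models correlations learns to discount the shared fluctuation, so a drift along that same direction barely moves its output. The decoder that ignores correlations is less accurate on the same day and loses further ground on the drifted day.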

1.0 seconds post-stimulus: The global broadcast

At about 1.0 seconds post-stimulus, the pattern of connectivity between different brain areas began to shift. “Initially, visual areas share information with other areas in specific channels,” Ebrahimi explained. “Then it appears that these brain areas come to a consensus about the animal’s response and broadcast this information to all the different brain areas.”

At this point, the scientists could predict a mouse’s upcoming response, lick or no lick, solely based on its brain signals. The signals that encode the animal’s response were independent of those that encode the stimulus (horizontal or vertical lines), and they were broadcast to all eight brain regions including, surprisingly, the primary visual cortex.

This finding adds to a growing body of work that suggests that the visual cortex does more than just vision. “There’s growing recognition that maybe these classical names for the brain areas are not so accurate,” Schnitzer said. For example, scientists have found signals for thirst, movement, sound, and reward all within the visual cortex.

From mouse brains to artificial intelligence

Many practical applications could emerge from these findings. The ability to build decoders that are robust across days could be a boon for the brain-computer interface community. Brain-computer interfaces are implantable devices that can discern a person’s intent solely by reading brain signals. Currently, scientists have to recalibrate these devices daily to account for day-to-day drifts in neural representations. This new work suggests that accounting for correlations in the noise might help solve this problem.

The study also reveals striking differences between how computer vision works and how the visual cortex works. Most computer vision systems receive visual data and return a classification: for example, cup. But what happens in the visual cortex is much more dynamic. Scientists may be able to borrow tricks from the brain, such as modulating redundancy, to improve the efficiency of computer vision systems. The work also hints at the potential importance of building computer vision systems that can integrate both visual and non-visual signals. In fact, Ganguli said, this may be a key first step toward building machines that are able to plan, imagine, and reason.

Citation: Sadegh Ebrahimi et al. “Emergent reliability in sensory cortical coding and inter-area communication.” Nature. Published online May 18, 2022. doi: 10.1038/s41586-022-04724-y

Other co-authors of the Nature paper are Jérôme Lecoq, of the Allen Institute for Brain Science, and Oleg Rumyantsev, Tugce Tasci, Yanping Zhang, Cristina Irimia, and Jane Li, of Stanford University.