Q&A: The tip of the iceberg - Building the next generation of neural prosthetics

Sergey Stavisky “fell hard” for brain-computer interfaces (BCIs) in a freshman neuroscience seminar at Brown University, when he first saw a video of a paralyzed clinical trial participant controlling a computer cursor on a screen using a brain implant.

Seeing in the nascent field an opportunity to understand the workings of the mind and solve real-world medical problems at the same time, Stavisky majored in neuroscience. He then took a job as a research engineer for John Donoghue and Leigh Hochberg’s pioneering BrainGate study, one of the first trials of implanted BCIs for people with tetraplegia and the very study that had originally inspired his passion for the field.

Stavisky took a temporary step away from human trials to earn his PhD with Professor Krishna Shenoy at Stanford, where he studied how the brains of rhesus macaques incorporate new output channels into their motor systems and worked to improve neural prosthetic technology. But Stavisky soon returned to working directly with patients with paralysis as a Wu Tsai Neurosciences Institute Interdisciplinary Postdoctoral Scholar in Shenoy and Professor Jaimie Henderson’s Neuroprosthetics Translational Laboratory, which is also part of the BrainGate study.

During his postdoc, Stavisky used virtual reality in new ways, as a direct-feedback training tool that helped paralyzed participants learn to operate prosthetic limbs intuitively with their minds. He also made the surprising discovery that a BCI listening in on the activity of neurons that normally control the hand and arm could also detect signals related to speech. This work reoriented Stavisky’s research toward the exciting possibility of developing a robust neural speech prosthetic for patients who had lost the ability to speak due to paralysis or a disease such as ALS.

In 2021, Stavisky won the $50,000 Regeneron Prize for Creative Innovation, a competition sponsored by Regeneron Pharmaceuticals recognizing creativity and independent scientific thinking among postdocs in biology and medicine. That same year, he launched his own research lab as an assistant professor in the Department of Neurological Surgery at UC Davis, where he continues developing BCI technology to restore people’s capacity to speak, reach, and grasp, and to better understand the neural computations that underlie those abilities.

The Wu Tsai Neurosciences Institute Interdisciplinary Scholar Awards provide funding to extraordinary postdoctoral scientists at Stanford University engaging in highly interdisciplinary research in the neurosciences.


We spoke with Stavisky to learn more about his time at Stanford, his current research, and his plans for the future:

What attracted you to working on engineering movement and speech prosthetics for people with paralysis?

I fell hard for neuroprosthetics when John Donoghue, a pioneer in the field, gave a lecture to my freshman neuroscience course at Brown. He had started the first clinical brain-computer interface (BCI) trial, called the BrainGate study, which showed that a person paralyzed with tetraplegia could move a cursor on a screen. I remember watching the video and thinking, “Oh my God, this is so cool. That person's moving something with his mind.”

Like most freshmen, I was still trying to figure out what I wanted to do with my life. I was into big physical engineering projects (I once landed a rocket on my high school’s roof), and as an avid gamer I had also gotten into writing software. Going into college, I wanted to understand how the mind works, but I also wanted to do research that would have an impact on humanity.

Donoghue’s work was basically the Venn diagram center of my interests. Here was an application of neuroscience with a clinical translational need to help people with paralysis that also had a lot of straight-up engineering and software development. I was hooked!

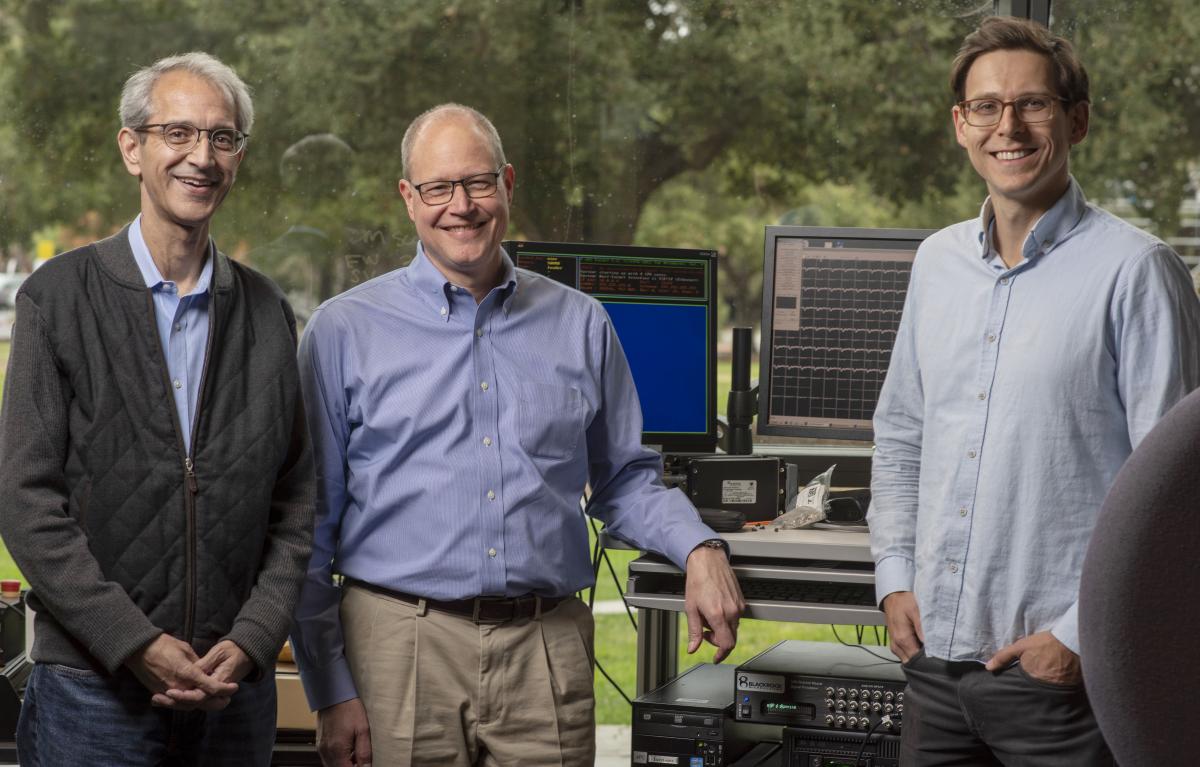

Stavisky (right) with Stanford mentors Krishna Shenoy (left) and Jaimie Henderson (center). Image credit: Peter Barreras/AP Images for HHMI.


You worked on the groundbreaking BrainGate trial after graduating, but then you came to Stanford to work with Krishna Shenoy and Jaimie Henderson as a graduate student and then postdoc. What was going on in the lab at the time, and how did you contribute to that work?

When I joined Krishna and Jaimie’s group, the lab was starting to achieve impressive 2D cursor control for clinical trial participants with neural interfaces, almost as good as an able-bodied person controlling a computer mouse. I thought maybe we could extend what the lab had already achieved to the additional degrees of freedom needed to control a robotic limb.

Imagine reaching for a coffee cup: you need to decode not just the endpoint of the arm but also its rotation and the hand’s opening and closing. We started with virtual reality tasks and worked toward having participants move a physical robot arm.

In the end, this worked even better than I expected. Actually, it became clear that we were limited more by our robotics than by our ability to decode what the person wanted to do. So, about three years ago, I pivoted my research to focus on the newer field of speech prosthetics.

What was happening in speech research at the time?

Other groups had started to show that electrodes placed on the surface of the brain, called ECoG arrays, could decode words from brain activity, including activity that controls the muscles of the vocal tract. Well, I knew the high-density Utah arrays implanted in BrainGate participants are about an order of magnitude better than ECoG for controlling computer cursors or robotic arms, so I thought we might be able to use Utah arrays to make an even better speech prosthetic for people with paralysis.

Close-up of a Utah electrode array of the type used in the BrainGate trial. Image credit: Peter Barreras/AP Images for HHMI.

The only problem was that our clinical trial was recording neural data from areas of the motor cortex that normally control arm and hand movement, not vocal tract muscles. Fortunately, Krishna and Jaimie let me try something unorthodox. Our clinical trial participants produced speech while we recorded from the arrays already implanted in their hand- and arm-related regions. To my surprise, we got a robust speech-related signal.

This was a shocking result and very interesting basic science. It revealed that the traditional “motor homunculus,” the map of how motor cortex is organized, is just the tip of the iceberg. When we dive beneath the surface with the higher-resolution Utah array and get access to the more subtle signals coming from neurons that are supposedly dedicated to the right hand, we see that they also modulate their activity when the person tries to move their leg or their other arm, or even when they try to speak.

What’s more, the performance we got decoding speech using the Utah array was comparable to previous ECoG studies recording directly from speech motor cortex. This gave us confidence that if we implant these same arrays in speech cortex, we will get really excellent performance. That prospect is very exciting. Speech today is where the cursor BCI world was a decade ago, but I think speech will progress much faster than cursors did because of all of our experience. That’s the next chapter, to be continued.

Is that what you’ll be focusing on in your new lab at Davis?

Yes, our first priority will be speech. I have all this preliminary data from hand knob motor cortex suggesting that if we go to the ventral speech area, we could make a really good speech BCI.

We’ll be recruiting people who have lost the ability to speak due to stroke or traumatic brain injury, as well as those who can still speak but are going to lose that ability, such as patients with early-stage ALS. Ideally, my goal is to have our first patient implanted by October 2022.

Once those experiments are underway, I plan to go back to the reach-and-grasp BCI and work with robotics experts to offload some of the low-level precision control to the robotic arm itself.

One big limitation for robot arms today is that participants can't feel what the arm is doing. If you think about how you grip a cup, there's a lot of sensory adjustment needed to not break or spill it. If I can't feel my hand, it's very hard to do that. I hope to use advances in smart robotics and AI to offload a lot of the fine-object manipulation to the robot so that it’s not something that a user really has to worry about.

We also anticipate moving to a head-mounted wireless system in the next couple of years and then to a fully wireless system in maybe five. But that technology still needs to be fleshed out and to go through the FDA.

What has been most meaningful to you about working with clinical trial participants?

It’s the opportunity to see the impact of my work in real time. One of my favorite memories from working with BrainGate participants at Stanford was one of the first times we had set one of our participants up to practice moving his robot arm. We'd set up all these foam blocks on a table for him to practice picking things up, but when we gave him the cue, he just moved this big robotic arm right through the pile — they all went on the floor. The participant just started laughing. He said, “This is so cool. I haven't knocked anything over accidentally in a decade!”

Later that day he was able to pick up the blocks successfully, but that first reaction is what stands out to me. I remember thinking, “Oh my god, I just made that happen.” I had been in the lab all night for the past two weeks trying to code the algorithm to get this thing working, and here it was.

What does your 2021 Regeneron Prize say about the importance of this field right now?

The field is going full exponential. It's nuts. Of course, the Elon Musk effect is strong. And a clinical trial participant fist-bumping Obama with his robot arm got a lot of people’s attention, too.

It used to be that if you did a PhD in molecular neuroscience you could get a job at Genentech or a startup developing drugs, but if you were a systems or computational neuroscientist and wanted to go to industry, you had to become a data scientist at Netflix or Google or something. Now students can go straight from their PhD to helping a company build the BCIs that will help patients.

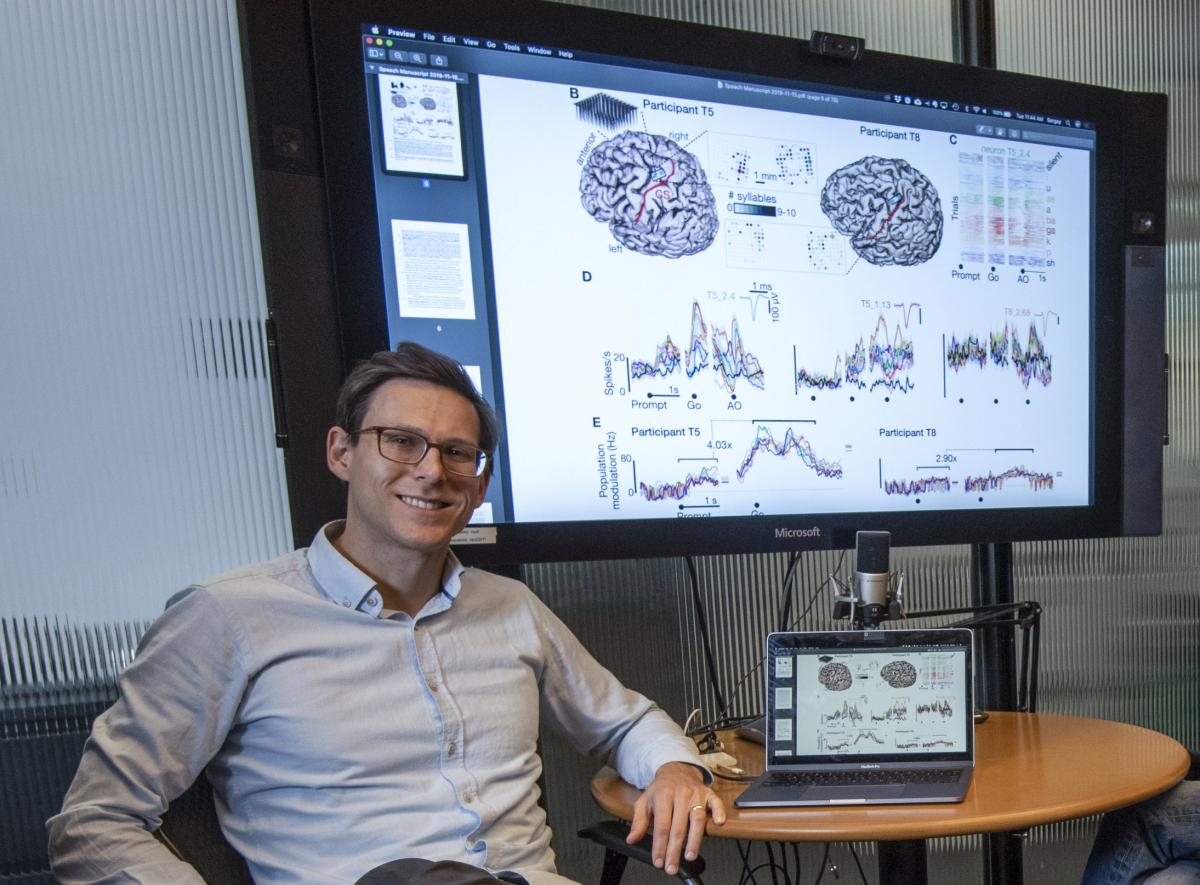

Stavisky presents his work on neural signals of speech. Image credit: Peter Barreras/AP Images for HHMI.

As for the Regeneron Prize, well, there has been such limited progress for patients with psychiatric and neurological disorders outside of surgery or pharmaceuticals. I think the prize reflects a growing excitement about adding a different approach to some of these problems, one focused on neural circuits and engineering, for example using adaptive brain stimulation for disorders like epilepsy or major depression.

Brain-computer interface work falls into that framework. We can read out what a person wants to move or say, then skip over the damaged part of the nervous system and recapitulate it through technology. This approach leverages a lot of amazing developments over the past decade or so in electronics, data science, AI, and machine learning. Now we can start using that progress to treat neurological injuries and disease.

Tell me about your postdoc experience. How did the Interdisciplinary Scholar Award impact you?

It was amazing to do this work at Stanford, where I had access to clinical trial participants and could collect all this data. There were small things like the organized lunches, which fostered a real sense of community in the program, and the additional trainings, like the very helpful one teaching us how to give an elevator pitch. But what it really boils down to is that Jaimie and Krishna are fantastic mentors. I've known from the beginning that my career development is their number one priority. It was amazing how much freedom they gave me to follow my hunches. We were an arm-and-cursor-control lab, but I was able to pitch Krishna and Jaimie on looking at speech activity. They were immediately like, “Of course, go ahead!” Not every institution or every PI would make time for a trainee’s crazy hunches. It won’t always be the case, but this time those hunches turned out to be right.